Some of it has to do with CAFE standards using vehicle footprint to determine the target MPG. Some of it is because of better safety standards. Some of it is just because that’s what a certain portion of the market wants, and the profit margins on the large vehicles are higher, so they spend more money marketing them (creating more demand).
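Under CAFE, a vehicle's footprint is its wheelbase multiplied by its average track width, and the fuel-economy target is a function of that footprint, so a bigger vehicle gets a more lenient target. Here is a minimal sketch of that incentive, assuming a clamped linear curve in gallons per mile. The function names and every coefficient (`max_mpg`, `min_mpg`, `slope_gpm`, `intercept_gpm`) are hypothetical placeholders, not the real NHTSA/EPA values.

```python
# Hypothetical sketch of a footprint-based fuel-economy target.
# All coefficients below are made-up placeholders, NOT real CAFE values.


def footprint_sq_ft(wheelbase_in: float, avg_track_width_in: float) -> float:
    """Footprint = wheelbase x average track width, converted from sq in to sq ft."""
    return wheelbase_in * avg_track_width_in / 144.0


def target_mpg(
    footprint: float,
    max_mpg: float = 40.0,        # hypothetical ceiling for the smallest vehicles
    min_mpg: float = 25.0,        # hypothetical floor for the largest vehicles
    slope_gpm: float = 0.0005,    # hypothetical gallons/mile added per sq ft
    intercept_gpm: float = 0.0,   # hypothetical intercept
) -> float:
    """Fuel use (gallons/mile) rises linearly with footprint, is clamped between
    1/max_mpg and 1/min_mpg, and is then converted back to MPG."""
    gpm = slope_gpm * footprint + intercept_gpm
    gpm = min(max(gpm, 1.0 / max_mpg), 1.0 / min_mpg)
    return 1.0 / gpm


compact = footprint_sq_ft(106, 61)  # roughly compact-car dimensions, in inches
pickup = footprint_sq_ft(145, 68)   # roughly full-size-pickup dimensions, in inches
print(f"compact: {compact:.1f} sq ft -> target {target_mpg(compact):.1f} mpg")
print(f"pickup:  {pickup:.1f} sq ft -> target {target_mpg(pickup):.1f} mpg")
```

With any curve of this shape, the pickup gets the lower (easier) target simply because it is bigger. That is the size incentive described above.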

I live in Germany, … It also had a Confederate flag in the back window.

WTF, I didn’t even know that was a thing outside the U.S. Do they claim “it’s our heritage, not hate”?

That’s not true. Kei trucks have comparatively low load and towing capacity. Their beds have the same dimensions as the most common pickup-truck bed size. Most people with trucks don’t haul around stone or heavy machinery, though.

51·5 days ago

This seems like the most plausible explanation. The only other thing I can think of is that they want to develop their own Copilot (which I’m guessing isn’t available in China due to U.S. AI restrictions?), and they’re just using their existing infrastructure to gather training data.

4·6 days ago

I wonder what the general use is for the Mac mini, MacBook Air, iMac, and MacBook Pro. People generally seem to do all the lightweight stuff, like social media consumption, on their phones, and desktops/laptops are used for the more heavyweight stuff. The only reason I’ve ever used a Mac was for iOS development.

6·6 days ago

I was looking at notebooks at Walmart the other day, and I was amazed that almost all of them had the same amount of RAM as my phone, or less.

12·6 days ago

I think SSDs are also soldered to the mainboard on most Apple products.

92·8 days ago

“In an unjust society the only place for a just man is prison.”

I’ve tried a couple of rolling distros (including Arch), and they always “broke” after ~6 months to a year. Both times, IIRC, it was because an update messed something up with my proprietary GPU drivers. Both times, I just installed a different distro, because it probably would’ve taken me longer to figure out what the issue was and fix it. I’m currently just using Debian stable, lol.

I’ve never used it, but the idea is that nutrient uptake will be faster than if someone just dressed the top of the soil with compost. The extra aerobic bacteria could also be beneficial.

7·10 days ago

Most CEOs are also conmen and not any smarter. It’s mostly just a nepotism racket at the executive level.

Decarboxylation happens when heat is applied (smoking or vaping, for example), turning THCA into delta-9 THC.
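Since decarboxylation strips a CO₂ group off THCA, the THC you get out weighs less than the THCA you put in. Here is a worked mass balance using standard molar masses, assuming (optimistically) 100% conversion:

```latex
\begin{aligned}
\underbrace{\mathrm{C_{22}H_{30}O_{4}}}_{\text{THCA},\ 358.48\ \mathrm{g/mol}}
&\xrightarrow{\ \text{heat}\ }
\underbrace{\mathrm{C_{21}H_{30}O_{2}}}_{\Delta^{9}\text{-THC},\ 314.47\ \mathrm{g/mol}}
+ \underbrace{\mathrm{CO_{2}}}_{44.01\ \mathrm{g/mol}} \\[4pt]
\frac{M_{\Delta^{9}\text{-THC}}}{M_{\text{THCA}}}
&= \frac{314.47}{358.48} \approx 0.877
\end{aligned}
```

That 0.877 factor is why “total THC” is commonly reported as Δ9-THC + 0.877 × THCA. Actual smoking or vaping is unlikely to reach this theoretical maximum.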

Delta-9 gummies are the real deal. I can’t tell the difference between THCA flower and regular flower (I’m pretty sure it’s just uncured bud). They also make THCA concentrates.

20·26 days ago

AfD is far right. They are ethno-nationalists who believe only ethnic Germans belong in Germany. One of their leaders has defended the Nazi SS. They have discussed “remigrating” German citizens out of Germany. How do you compromise with people who would like to carry out ethnic cleansing? Only forcibly relocate Muslims for now, and wait until next year to expel the Jewry?

Most far-right politicians do not debate or operate politically in good faith. IDK about the people who vote for them. I think it usually takes years of slow progress for people to move away from extremist positions, and it takes a change in their environment (a new social circle, life experiences, media consumption habits, etc.) to start the process.

35·26 days ago

A lot of the “elites” (the OpenAI board, Thiel, Andreessen, etc.) are on the effective-accelerationism grift now. The idea is to disregard all negative effects of pursuing technological “progress,” because techno-capitalism will solve all problems. They support burning fossil fuels as fast as possible because that will enable “progress,” which will solve climate change (through geoengineering, presumably). I’ve seen some accelerationists write that it would be okay if AI destroyed humanity, because AI would be the next evolution of “intelligence.” I dunno if they’ve fallen for their own grift or not, but it’s obviously a very convenient belief for them.

Effective accelerationism builds on the accelerationism of Nick Land, who appears to be some kind of fascist.

33·1 month ago

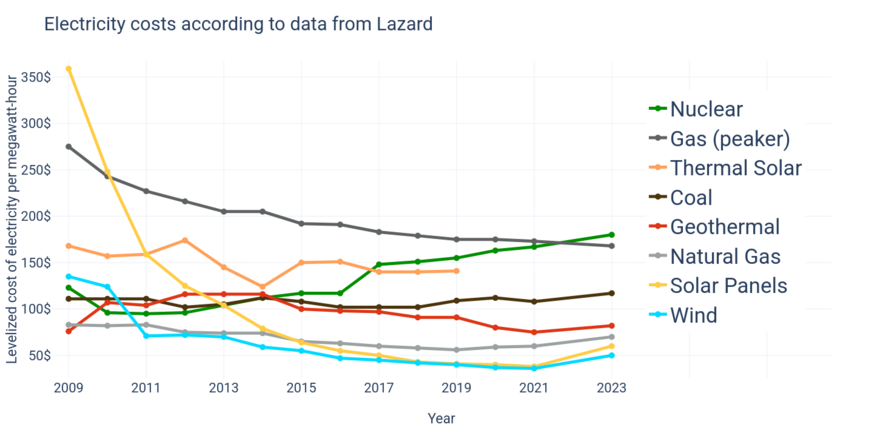

IDK. Nuclear looks like it kinda sucks.

8·1 month ago

There’s this map: https://liveuamap.com/

I haven’t been following the war very closely, but AFAIK, they’ve been in a stalemate for many months with neither side making any significant gains.

31·1 month ago

We’re close to the peak of what current NN architectures and methods can achieve. All this started with the introduction of the transformer architecture in 2017. Advances in architecture and methods have been fairly small and incremental since then. The advances in performance have mostly come from throwing more data and compute at the models, and diminishing returns have been observed. GPT-3 cost something like $15 million to train. GPT-4 is a little better and cost something like $100 million to train. If the next model costs $1 billion to train, it will likely be only a little better.
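The diminishing-returns pattern is what a power-law scaling curve predicts. Here is a minimal sketch, assuming loss falls as a·C^(−α) in training compute C, with made-up constants `a` and `alpha` (not fitted to GPT-3, GPT-4, or any real model). The helper names `loss` and `marginal_gains` are hypothetical.

```python
# Hypothetical power-law scaling curve: loss(C) = a * C ** (-alpha).
# a and alpha are illustrative placeholders, not values fitted to any real model.


def loss(compute: float, a: float = 10.0, alpha: float = 0.05) -> float:
    """Loss as a power law in training compute (arbitrary units)."""
    return a * compute ** (-alpha)


def marginal_gains(budgets: list[float]) -> list[tuple[float, float, float]]:
    """Return (compute, loss, improvement over the previous budget) for each budget."""
    rows = []
    prev_loss = None
    for compute in budgets:
        current = loss(compute)
        gain = 0.0 if prev_loss is None else prev_loss - current
        rows.append((compute, current, gain))
        prev_loss = current
    return rows


# Each step is 10x more compute than the last (arbitrary units).
for compute, value, gain in marginal_gains([1e7, 1e8, 1e9, 1e10]):
    print(f"compute={compute:.0e}  loss={value:.3f}  gain from last 10x={gain:.3f}")
```

Under this assumption, each 10× step multiplies the loss by the same factor (10^(−0.05) ≈ 0.89 here). The absolute gain therefore shrinks while the cost of each step grows tenfold. Whether real models keep following such a curve is an empirical question this sketch does not settle.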

2·1 month ago

LLMs do sometimes hallucinate even when giving summaries, i.e., they put things in the summaries that were not in the source material. Bing did this often the last time I tried it. In my experience, LLMs seem to do very poorly when their context is large (e.g., when “reading” a long article or several articles). With ChatGPT, the output seems more likely to be factually correct when it just generates “facts” from its model instead of “browsing” and adding articles to its context.

Yeah, a lot of the regulations are written by the industries they’re supposed to regulate.